This article explains how load balancing benefits your cloud environment and why it’s a must for many businesses: it plays an important role in keeping your cloud-based app available to customers, business partners, and end users. Before that, here is a short introduction to what load balancing actually is.

What is load balancing?

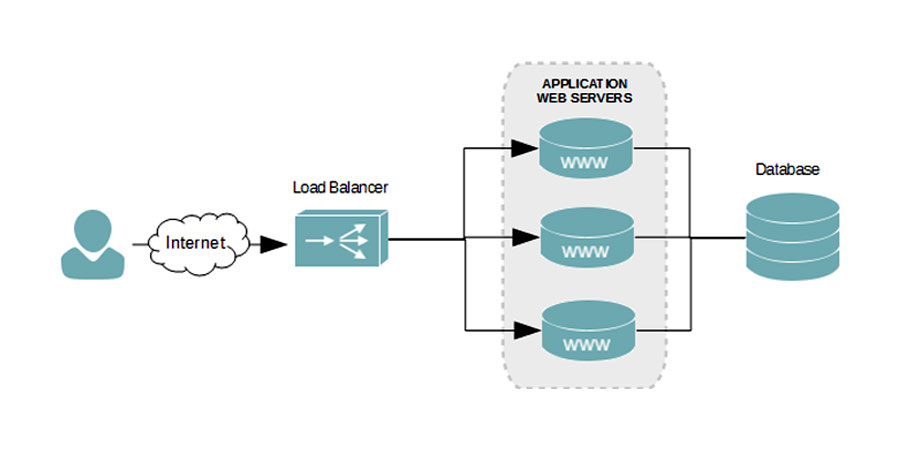

Load balancing is the process of distributing workloads across multiple servers. A load balancer is the server that performs this distribution. Its main purpose is to prevent any single server from getting overloaded and possibly breaking down. Simply put, load balancing improves service availability and helps prevent downtime in your business.

When the workload an individual server receives stays within acceptable levels, the server has sufficient computing resources (e.g. CPU, RAM, and disk space) to process requests within acceptable response times. Fast response times are vital to end-user satisfaction and productivity. When you run out of available resources on a particular server, you can either upgrade it or add more servers and distribute the load across them.

Why do you need load balancing?

There are three important issues that load balancers were made to address: performance, availability, and economy. Load balancing can be a solution to both hardware and software performance limits.

Segregating tasks is key to how a load balancer functions, whether the constraint is CPU load, network load, or the memory capacity required to complete a task on your server.

The benefits of load balancing

The demand for fast, complex software is constantly pushing the limits of machines. Your users want your services to be fast and reliable, and you want to provide them with quality service at the highest ROI. Load balancing can bring many benefits to your business; here we review the important ones.

Handling Traffic Surges: When there is a surge in traffic, or when a particular server is getting more requests than the others, the load balancer steps in and delegates the load to different, less busy servers. When the load balancer sees that server A is getting more requests than the others, it simply distributes the extra requests to servers B and C. A load balancer can help manage the traffic flow by spreading the workload over multiple computers or servers, even in different regions. This prevents the network from slowing down and affecting work processes.

High Availability and More Redundancy: Machine failure happens, so you should avoid single points of failure whenever possible. A load balancer can prevent your app from failing if a server in your cluster fails, because the remaining servers take over its work. This is known as redundancy. When setting up or upgrading your network, create clusters of servers that run the same application. This allows you to remove a failing server from your system effortlessly and to transfer its current workload to a functioning server without any downtime.
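The failover behaviour described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the `ServerPool` class, its method names, and the server names are hypothetical, and a real load balancer would mark servers down based on periodic health checks rather than a manual call.

```python
class ServerPool:
    """Hypothetical pool that rotates through servers and skips failed ones."""

    def __init__(self, servers):
        # Every server starts out healthy.
        self.healthy = {server: True for server in servers}
        self._next = 0

    def mark_down(self, server):
        # In practice a failed health check would trigger this.
        self.healthy[server] = False

    def mark_up(self, server):
        self.healthy[server] = True

    def pick(self):
        servers = list(self.healthy)
        # Try each server at most once, starting from the rotation position.
        for _ in range(len(servers)):
            candidate = servers[self._next % len(servers)]
            self._next += 1
            if self.healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy servers available")


pool = ServerPool(["server-a", "server-b", "server-c"])
pool.mark_down("server-b")  # simulate a machine failure
picks = [pool.pick() for _ in range(4)]
print(picks)  # ['server-a', 'server-c', 'server-a', 'server-c']
```

Requests keep flowing to the remaining servers, and the failed one receives nothing until it is marked up again.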

Lower Server Costs: You could buy the latest and greatest servers from Dell or HP every year to keep up with the growing demand of your user base, and you could buy a second one to protect yourself from assured failure, but this gets expensive. There are some cases where scaling vertically is the right choice, but for the vast majority of web application workloads it’s not an economical procurement choice. Usually, the more powerful a machine is when it is released, the more of a premium you are charged for its capacity. Load balancing lets you scale horizontally instead, spreading the load across several less expensive machines.

Better scalability: Load balancing plays a key role in a cloud’s scalability. By nature, cloud infrastructures are supposed to scale up easily to accommodate any uptick or surge in traffic. When a cloud “scales up”, it typically spins up multiple virtual servers and runs multiple application instances. The main network components responsible for distributing traffic across these new instances are the load balancers.

Update without fear of Downtime: Load balancing adds flexibility to your network, which makes updating your app and code easier and with less friction. Your load balancer can shift the workload to one server or cluster of servers while you update the rest. This prevents updates from affecting clients’ access to data or services.
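One way to picture this is a rolling update, where servers are taken out of rotation in small batches, updated, and then returned. The sketch below is a simplified illustration, not a deployment tool: `rolling_update`, the `update` callback, and the server names are all hypothetical.

```python
def rolling_update(servers, batch_size, update):
    """Update servers in batches while the rest keep serving traffic."""
    if batch_size < 1 or batch_size >= len(servers):
        raise ValueError("batch_size must leave at least one server in rotation")

    in_rotation = list(servers)
    steps = []
    for start in range(0, len(servers), batch_size):
        batch = servers[start:start + batch_size]
        # Drain the batch: the load balancer stops sending it traffic.
        in_rotation = [s for s in in_rotation if s not in batch]
        steps.append((batch, list(in_rotation)))
        for server in batch:
            update(server)
        # Return the updated servers to the rotation.
        in_rotation.extend(batch)
    return steps


updated = []
steps = rolling_update(["web-1", "web-2", "web-3", "web-4"], 2, updated.append)
for batch, serving in steps:
    print("updating", batch, "while serving from", serving)
```

At every step at least one server stays in rotation, so clients always have somewhere to go.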

Protect your brand: If one region becomes nonoperational due to a natural calamity like a catastrophic earthquake, flood, or tsunami, load balancers can direct traffic to regions that haven’t been affected by the calamity. The protection against disasters strengthens your brand’s reliability image, which is priceless.

Types of Load Balancers

Depending on the load balancing algorithms they support, load balancers may even be able to determine if a certain server (or set of servers) is likely to get overloaded more quickly and redirect traffic to other nodes that are deemed healthier. Proactive capabilities like this can significantly reduce the chances of your cloud services becoming unavailable.

One way to load balance is through the Domain Name System (DNS), which is considered client side. Another is to load balance on the server side, where traffic passes through a load balancing device that distributes load over a pool of servers. Both ways are valid, but DNS and client-side load balancing is limited and should be used with caution: DNS records are cached according to their time-to-live (TTL) attribute, so after a change clients may keep being directed to non-operating nodes until the cached record expires. Server-side load balancing is more powerful. It can provide fine-grained control and enable immediate changes to the interaction between client and application.
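The TTL limitation is easier to see with a small simulation. The following is a toy model of a client-side DNS cache, not a real resolver: the `CachingResolver` class, the hostname, the addresses, and the 300-second TTL are all assumptions chosen for illustration.

```python
class CachingResolver:
    """Toy client-side cache that honours a fixed TTL (hypothetical)."""

    def __init__(self, lookup, ttl_seconds):
        self._lookup = lookup      # function that returns the current record
        self._ttl = ttl_seconds
        self._cache = {}           # name -> (address, expiry time)

    def resolve(self, name, now):
        cached = self._cache.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]       # still within TTL: reuse the cached answer
        address = self._lookup(name)
        self._cache[name] = (address, now + self._ttl)
        return address


records = {"app.example.com": "10.0.0.1"}
resolver = CachingResolver(records.get, ttl_seconds=300)

first = resolver.resolve("app.example.com", now=0)
records["app.example.com"] = "10.0.0.2"   # 10.0.0.1 fails, DNS is repointed
stale = resolver.resolve("app.example.com", now=120)
fresh = resolver.resolve("app.example.com", now=300)
print(first, stale, fresh)  # 10.0.0.1 10.0.0.1 10.0.0.2
```

Two minutes after the change the client is still sent to the old address; only once the TTL has elapsed does it see the new one.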

Server-side load balancers have evolved from simply routing packets to being fully application aware. These two types are known as network load balancers and application load balancers, respectively.

Application load balancers are where the interesting advancements are. Because the load balancer is able to understand the packet at the application level, it has more context for how it balances and routes traffic. An application load balancer is also a great place to add another layer of security or to cache requests to lower response times.

Network load balancers are great for simply and quickly distributing load. Application load balancers are important for routing specifics, such as session persistence and presentation.
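As a rough illustration of application-level routing, the sketch below picks a server pool from the request path, something a load balancer can only do if it understands the request itself. The `choose_pool` function, the path prefixes, and the pool names are hypothetical and not taken from any particular product.

```python
def choose_pool(path, routes, default_pool):
    """Return the pool whose path prefix matches; the longest prefix wins."""
    best = None
    for prefix in routes:
        if path.startswith(prefix) and (best is None or len(prefix) > len(best)):
            best = prefix
    return routes[best] if best is not None else default_pool


routes = {
    "/api/": ["api-1", "api-2"],
    "/api/reports/": ["reports-1"],
    "/static/": ["cache-1"],
}
default_pool = ["web-1", "web-2"]

print(choose_pool("/api/users", routes, default_pool))        # ['api-1', 'api-2']
print(choose_pool("/api/reports/2024", routes, default_pool)) # ['reports-1']
print(choose_pool("/index.html", routes, default_pool))       # ['web-1', 'web-2']
```

A network load balancer, by contrast, would see only addresses and ports and could not tell these requests apart.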

Round Robin: Round Robin balancing is the simplest type of load balancing there is. The load balancer takes each incoming request and hands it to the next server in the network in sequence, returning to the first server after the last. Because of this simple and straightforward assignment technique, Round Robin is the easiest load balancing method to implement.
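A minimal Python sketch of Round Robin might look like the following. The class name and server names are made up for illustration, and the sketch ignores server health and capacity.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands each incoming request to the next server in a fixed rotation."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(list(servers))

    def pick(self):
        # Return the next server, wrapping around after the last one.
        return next(self._rotation)


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
assignments = [balancer.pick() for _ in range(5)]
print(assignments)
# ['server-a', 'server-b', 'server-c', 'server-a', 'server-b']
```

Note that the balancer keeps no information about how busy each server is, which is what makes it so simple.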

Least Connections: With this type of balancing, the load balancer checks all the servers in a network and then sends the request to the server with the fewest active requests or connections. It’s important to note that the load balancer reassesses the entire network whenever there’s a new request. Because of this constant refreshing, this type of load balancing is popular with websites or networks that typically have long sessions per request or connection.
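The idea can be sketched by tracking an active-connection count per server. This is a simplified, single-threaded illustration; the class, its `acquire` and `release` methods, and the server names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Sends each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Re-evaluate every server on each request; on ties, min() returns
        # the first server in insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection closes so the count stays accurate.
        if self.active[server] > 0:
            self.active[server] -= 1


lb = LeastConnectionsBalancer(["server-a", "server-b", "server-c"])
first = lb.acquire()    # server-a
second = lb.acquire()   # server-b
third = lb.acquire()    # server-c
lb.release(second)      # server-b finishes its connection early
fourth = lb.acquire()   # server-b again, since it is now the least busy
print(first, second, third, fourth)
```

Because long-lived connections keep their count raised until released, busy servers are naturally passed over.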

Source IP hash: The Source IP hash technique is a little more than just a load balancer. It also acts as a tool that helps you continue a session even if there’s a broken connection. Whenever there’s a request, the algorithm takes the IP address of the client and generates a key from it, called a hash key. That key determines which server receives the request, so the same client IP maps to the same server for as long as the server pool does not change. If you lose your connection and reconnect, the load balancer simply computes your key again and assigns you to the same server as before. This way, your session can continue without any hassle. This technique is also widely used in the e-commerce industry.
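A minimal sketch of mapping a client IP to a server through a hash is shown below. The use of SHA-256 and a simple modulo is an assumption made for illustration; real load balancers use their own hash functions, and the function name, server names, and IP address are hypothetical.

```python
import hashlib


def pick_server(client_ip, servers):
    """Map a client IP to a server deterministically via a hash key."""
    # SHA-256 is an illustrative choice, not what any specific product uses.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    hash_key = int.from_bytes(digest[:8], "big")
    return servers[hash_key % len(servers)]


servers = ["server-a", "server-b", "server-c"]
before_disconnect = pick_server("203.0.113.7", servers)
after_reconnect = pick_server("203.0.113.7", servers)
print(before_disconnect == after_reconnect)  # True
```

One caveat of the modulo approach: adding or removing a server changes the mapping for many clients, which is why some systems use consistent hashing instead.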

Conclusion

Load balancing is a catchphrase being thrown around these days, but fanciness aside, it is a process that your organization should undertake to ensure that your network is functioning effectively, efficiently, and optimally.

As you deliver more services through your cloud infrastructure, you can expect a significant increase in traffic. To scale your infrastructure to support the increasing demand while maintaining acceptable levels of responsiveness and availability, make sure you incorporate load balancing into your cloud endeavors.