Nvidia is going after Intel’s datacenter market share with Grace, an Arm-based CPU and server architecture designed for accelerated computing, AI, and HPC workloads. The chip is named after computer scientist Grace Hopper. One of the first programmers of the Harvard Mark I computer, she was a pioneer of computer programming who invented one of the first linkers. Fittingly for a chip named after a programming pioneer, Grace is specifically targeted at applications such as training massive NLP (natural language processing) models “with more than 1 trillion parameters,” as Nvidia puts it.

NVIDIA claims Grace can deliver up to 30 times higher aggregate bandwidth than today's fastest servers, and up to 10 times higher performance for applications that process terabytes of data. It aims to enable scientists and researchers to train the world's largest models to solve the most complex problems.

NVIDIA's expansion from datacenter GPUs into CPUs isn't surprising, but it could raise some concerns for Intel, which still controls the vast majority of the server CPU market. Nvidia representatives were careful to point out that Grace is not designed to compete head-to-head with Intel Xeon and AMD EPYC datacenter CPUs. Instead, Grace is “designed to be tightly coupled with an Nvidia GPU to remove bottlenecks for the most complex giant model AI and HPC applications.”

Intel is losing desktop and laptop market share to AMD and Arm-based chips, and Nvidia’s latest move is another blow to Intel’s market share. Intel is also contending with an ongoing chip shortage, and its in-house foundry has fallen behind TSMC in the race to manufacture chips on smaller process nodes. AMD, which outsources its manufacturing to TSMC rather than producing chips internally, has pulled ahead of Intel with more advanced processors.

Grace will be built on a bleeding-edge 5nm process node. Nvidia also announced that the CPU’s first deployment, at the beginning of 2023, will be in the Alps system at the Swiss National Supercomputing Center (CSCS).

In recent years, Nvidia has positioned its high-end GPUs as the hardware of choice for artificial intelligence and machine learning workloads. A CPU has a relatively small number of powerful cores that work through data largely piece by piece, while a GPU has thousands of simpler cores that apply the same operation to large arrays of integers and floating-point numbers simultaneously. Working together, CPUs and GPUs can process AI workloads much faster than either one alone.

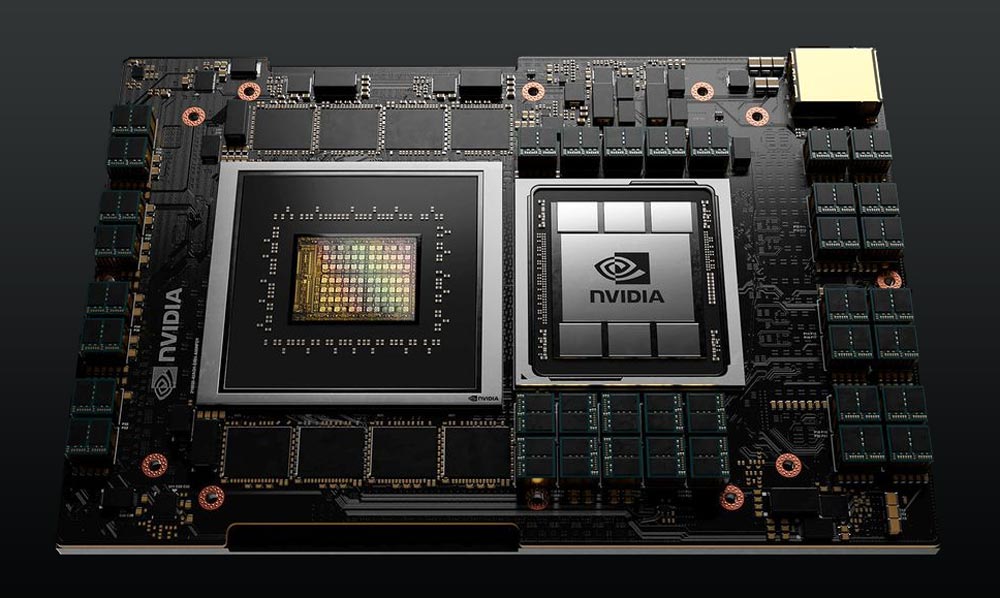

Solving the largest AI and HPC problems requires both high-capacity and high-bandwidth memory (HBM). The fourth-generation NVIDIA NVLink delivers 900 gigabytes per second (GB/s) of bidirectional bandwidth between the NVIDIA Grace CPU and NVIDIA GPUs. The connection provides a unified, cache-coherent memory address space that combines system and HBM GPU memory for simplified programmability. This coherent, high-bandwidth connection between CPU and GPUs is key to accelerating tomorrow’s most complex AI and HPC problems.
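To give a rough sense of what the link bandwidth means for a trillion-parameter model, here is a back-of-the-envelope sketch. It assumes the 900 GB/s bidirectional figure splits evenly into 450 GB/s per direction, uses decimal units (1 GB = 10^9 bytes), ignores latency and protocol overhead, and assumes a hypothetical model stored as 16-bit values. The roughly 32 GB/s PCIe 4.0 x16 per-direction figure is an approximate comparison point, not a number from Nvidia's announcement, and the helper functions are hypothetical.

```python
# Back-of-the-envelope transfer-time estimate (hypothetical helpers, not an Nvidia tool).
# Assumptions: decimal units (1 GB = 1e9 bytes), bidirectional bandwidth split
# evenly between the two directions, no latency or protocol overhead.


def one_way_bandwidth_gb_s(bidirectional_gb_s: float) -> float:
    """Per-direction bandwidth, assuming the bidirectional figure is split evenly."""
    return bidirectional_gb_s / 2


def transfer_seconds(num_bytes: float, bandwidth_gb_s: float) -> float:
    """Idealized time to move num_bytes at a sustained bandwidth given in GB/s."""
    return num_bytes / (bandwidth_gb_s * 1e9)


# Hypothetical workload: 1 trillion parameters stored as 16-bit (2-byte) values.
params = 1e12
bytes_per_param = 2
model_bytes = params * bytes_per_param  # 2e12 bytes = 2,000 GB

nvlink_one_way = one_way_bandwidth_gb_s(900)  # 450 GB/s per direction (assumed split)
pcie4_x16_one_way = 32  # approximate PCIe 4.0 x16 per-direction bandwidth

nvlink_time = transfer_seconds(model_bytes, nvlink_one_way)
pcie_time = transfer_seconds(model_bytes, pcie4_x16_one_way)

print(f"NVLink (assumed 450 GB/s): {nvlink_time:.1f} s")  # 4.4 s
print(f"PCIe 4.0 x16 (~32 GB/s):   {pcie_time:.1f} s")    # 62.5 s
```

Under these idealized assumptions, moving 2 TB of weights takes about 4.4 seconds over the assumed NVLink direction versus about 62.5 seconds over a PCIe 4.0 x16 link; real transfers will be slower on both.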

As the parallel compute capabilities of GPUs continue to advance, workloads can still be gated by serial tasks run on the CPU. A fast and efficient CPU is a critical component of system design to enable maximum workload acceleration.
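The classic way to quantify this serial bottleneck is Amdahl's law, which is not named in Nvidia's announcement but captures the same idea: if only part of a workload is accelerated, the unaccelerated part caps the overall speedup. The sketch below uses a slightly generalized form that also lets the serial (CPU) portion be sped up. The 95% parallel fraction and the speedup values are hypothetical, chosen only for illustration.

```python
# Amdahl's law: overall speedup when parts of a workload are accelerated.
# All numbers below are illustrative assumptions, not Nvidia measurements.


def amdahl_speedup(parallel_fraction: float, parallel_speedup: float,
                   serial_speedup: float = 1.0) -> float:
    """Overall speedup when `parallel_fraction` of the original runtime is sped up
    by `parallel_speedup` (e.g. on a GPU) and the remaining serial part is sped up
    by `serial_speedup` (e.g. a faster CPU; 1.0 means unchanged)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction / serial_speedup
                  + parallel_fraction / parallel_speedup)


# Hypothetical workload: 95% of the original runtime parallelizes on the GPU.
for gpu_speedup in (10, 100, 1000):
    overall = amdahl_speedup(0.95, gpu_speedup)
    print(f"GPU {gpu_speedup:>4}x faster -> overall {overall:.1f}x")
# Prints roughly 6.9x, 16.8x and 19.6x: the 5% serial part caps the total near 20x.

# Doubling the speed of the serial CPU portion raises the 100x-GPU case to ~29x.
print(f"With a 2x faster CPU: {amdahl_speedup(0.95, 100, serial_speedup=2):.1f}x")
```

The ceiling of 1 / (serial fraction) is why, once GPUs are fast enough, further gains depend on shrinking the time spent in serial CPU code.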

NVIDIA also announced a partnership with Amazon Web Services to bring its GPUs together with AWS's ARM-based Graviton2 processor. That arrangement shows just how flexible the company can be, and boosting another ARM-based processor can only hurt Intel more. Those NVIDIA-powered AWS instances will be able to run Android games natively, the company says, as well as stream games to mobile devices and accelerate rendering and encoding.

It's unclear whether NVIDIA's Grace CPUs will gain any ground against Intel's industry-standard Xeons in the datacenter market. Still, Grace represents a bold step and renews hope that Arm-based CPUs can make progress in the datacenter market again. Given the growing importance of energy-efficient supercomputers, Grace has a good shot at being more immediately successful than NVIDIA's last ARM-based hardware, the Tegra SoC.